Understanding AI: The Most Overhyped Yet Underestimated Technology of Our Time

When Everyone's an Expert on Something Nobody Really Understands

Picture this: I'm sitting in yet another business meet-up, and the word "AI" is flying around the room with the frequency of Samuel L. Jackson's favourite expletive in Pulp Fiction. Everyone's confidently discussing their "AI-powered" solutions, their "AI strategies," and their plans for "AI transformation."

The fascinating paradox? Most people in that room, myself included at times, have only a surface-level understanding of what artificial intelligence actually does. How do I know this? Partly because the field is evolving so rapidly, with new releases from Anthropic, Google, and OpenAI arriving almost weekly, that even researchers struggle to keep up with the implications of each advancement.

Yet somehow, we've all become instant experts. More concerning, we've collectively embraced a narrative about AI that's simultaneously too pessimistic and too optimistic, missing the nuanced reality of what this technology represents for human progress.

The Tale of Two Perceptions

Think about how we typically encounter stories about AI in media and business discussions. On one side, you hear breathless excitement about AI solving every problem imaginable, from climate change to traffic congestion to finding your perfect soulmate. On the other side, there's existential dread about AI rendering entire professions obsolete, creating mass unemployment, or worse.

Both perspectives share a fundamental flaw: they treat AI as magic rather than as sophisticated engineering. This reminds me of the Bollywood film Drishyam, in which the protagonist shapes what witnesses believe they saw so effectively that the constructed story ends up carrying more weight than what actually happened. We're living through our own version of this effect, where the stories we tell about AI become more influential than the technology itself.

Having experienced similar misconceptions about technology across different cultures—from India to the UK—I've realized this isn't a regional phenomenon. It's a human tendency to mythologize what we don't fully understand, especially when that thing promises to change everything.

What AI Actually Is (A More Honest Explanation)

Let me share a different way to think about artificial intelligence that might help cut through the mystique. Imagine you had access to an impossibly well-read research assistant who could instantly recall patterns from millions of books, articles, conversations, and documents. This assistant could quickly identify connections between ideas, suggest what typically follows certain kinds of questions, and even generate new combinations of existing concepts.

However, this research assistant has some peculiar limitations. They can only work with patterns they've seen before, they can't truly understand context the way humans do, and they have no ability to imagine genuinely novel solutions or question fundamental assumptions about the problems they're helping solve.

Current AI systems function much like this hypothetical assistant. They're extraordinarily sophisticated pattern-matching engines that can process vast amounts of information and generate responses based on statistical relationships in their training data. This capability is genuinely impressive and genuinely useful, but it's fundamentally different from human intelligence in crucial ways.

When you interact with an AI language model, it is essentially performing complex probability calculations: it estimates how likely each possible next word (strictly, each token, which may be a word or a word fragment) is, given the patterns it learned during training, and then picks from the likeliest candidates. It doesn't "understand" in the human sense; it recognizes and reproduces patterns with remarkable accuracy.
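To make "predicting what comes next" concrete, here is a deliberately tiny sketch: a bigram model that simply counts which word follows which in a handful of sentences and turns those counts into probabilities. This is a toy, not how modern language models are built (they use large neural networks over tokens and vastly more data, and they generalize far beyond exact word pairs they have seen), and the function names and sample sentences are hypothetical, chosen purely for illustration. What it does show is the core intuition: the "answer" is whatever the observed patterns make most likely, and a word the model has never seen leaves it with nothing to draw on.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word is followed by each other word (toy bigram model)."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair every word with the word that comes right after it.
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def next_word_probabilities(counts: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follow-counts for `word` into a probability distribution."""
    # .get() does not create a new entry in the defaultdict.
    followers = counts.get(word.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # Unseen word: no learned pattern to reproduce.
    return {w: c / total for w, c in followers.items()}


# Hypothetical "training data" for illustration only.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigrams(corpus)

probs = next_word_probabilities(model, "the")
print(max(probs, key=probs.get))  # prints: cat
print(next_word_probabilities(model, "unicorn"))  # prints: {}
```

After "the", the model favours "cat" only because "the cat" appears twice in its tiny corpus; it has no idea what a cat is. Real systems are enormously more capable, but the prediction is still driven by learned statistical patterns rather than by understanding in the human sense.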

The Pattern Recognition Revelation

Understanding AI as pattern recognition helps explain both its strengths and limitations more clearly. Consider how humans learn to drive a car versus how an AI system might learn the same task. Humans combine direct instruction, observation, practice, intuition, and real-time adaptation to new situations. We understand the purpose of driving, the social contract of sharing roads, and can make split-second ethical decisions in novel scenarios.

An AI system learning to drive analyzes vast numbers of recorded driving examples, identifies patterns in successful navigation, and learns to reproduce those patterns in similar situations. It can become extremely proficient at this task, potentially even safer than human drivers in many circumstances. But it's operating through pattern matching rather than genuine understanding of the purpose, social context, or ethical dimensions of driving.

This distinction matters because it helps us understand where AI excels and where human involvement remains not just valuable but essential. AI systems excel at tasks involving pattern recognition at scale, consistency in applying learned rules, and processing information faster than humans can manage. Humans excel at tasks requiring genuine creativity, ethical reasoning, contextual judgment, and the ability to imagine solutions that don't exist in any training data.

The Economics Behind the Myths

Now let's explore why the conversation around AI has become so polarized and often misleading. There's an economic dimension to how AI narratives spread that rarely gets discussed openly but significantly shapes public understanding.

Consider the incentive structures in technology investment. When entrepreneurs pitch AI solutions to investors, they're competing for attention in an environment where revolutionary claims get more funding than evolutionary improvements. If I tell you my technology can make knowledge workers fifteen percent more productive, that's valuable but not earth-shaking. If I claim it can eliminate the need for fifty percent of knowledge workers, suddenly we're discussing fundamental economic transformation.

This creates what might be called a narrative bubble, where the story becomes temporarily more important than the underlying reality. The challenge is that these narratives influence real decisions (career choices, educational investments, corporate strategies) based on projected rather than current capabilities.

The venture capital ecosystem, which has evolved from supporting long-term vision to optimizing for shorter-term returns, naturally amplifies these dramatic narratives. This isn't necessarily malicious, but it does explain why the public conversation about AI tends toward extremes rather than nuanced assessment of capabilities and limitations.

The Collaboration Revolution That Nobody Talks About

Here's where the conversation gets interesting and hopeful. While everyone's debating whether AI will replace humans, the most successful AI implementations are quietly demonstrating something completely different: human-AI collaboration often produces better outcomes than either humans or AI working alone.

Think of AI tools as sophisticated thinking partners rather than potential replacements. When you use a calculator, you're not outsourcing mathematical thinking—you're freeing your cognitive resources to focus on problem formulation, interpretation of results, and application of insights. AI tools can function similarly across a much broader range of intellectual tasks.

Consider how this works in practice. When I use AI to help structure my thoughts for this article, I'm not having the AI write my ideas—I'm using it as a thinking partner that can quickly process information, suggest organizational approaches, and help me identify gaps in my reasoning. The creativity, judgment, and final decision-making remain distinctly human contributions.

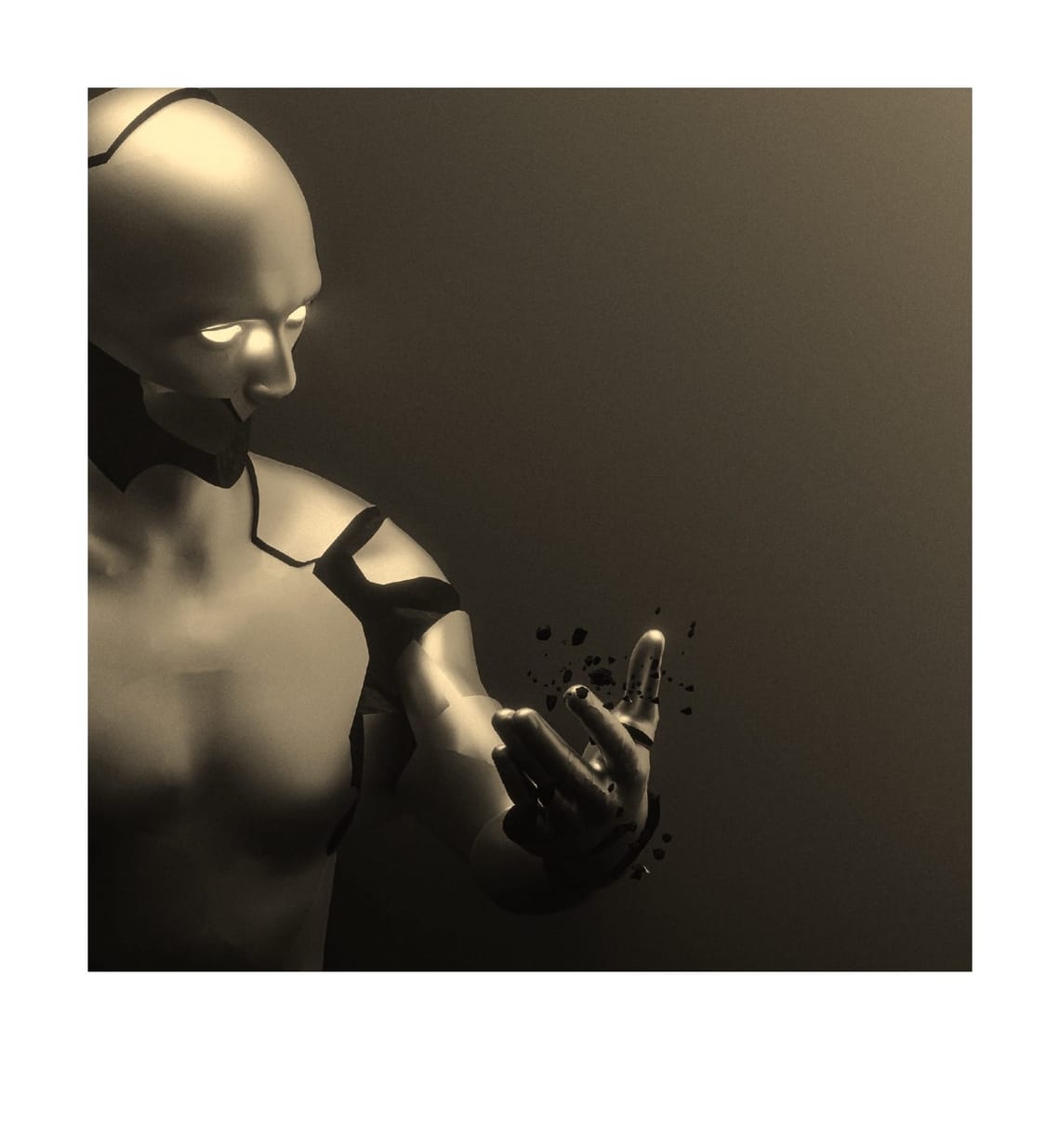

This collaborative approach explains why AI is often called a "second brain." A second brain doesn't replace your primary brain—it extends its capabilities in specific directions. Your first brain maintains responsibility for goals, values, creativity, and contextual judgment, while your second brain handles information processing, pattern identification, and initial analysis of possibilities.

Understanding the Limits Through Honest Assessment

To develop realistic expectations about AI capabilities, it helps to understand where current systems consistently struggle. These limitations aren't temporary technical problems waiting to be solved—they're fundamental to how these systems work.

AI systems struggle with genuine novelty. They can recombine existing patterns in impressive ways, but they cannot imagine possibilities that exist completely outside their training data. They cannot question the fundamental assumptions built into the problems they're asked to solve. They cannot bring ethical reasoning or contextual judgment that goes beyond what they've seen in their training examples.

Perhaps most importantly, AI systems lack intentionality. They don't want things, don't have purposes beyond responding to prompts, and don't make decisions based on values or long-term consequences. These aren't bugs to be fixed—they're features of how these systems work.

Human intelligence involves not just pattern recognition but also creativity, intentionality, ethical reasoning, and the ability to imagine and work toward futures that don't currently exist. These capabilities aren't just nice additions to pattern recognition—they're qualitatively different types of intelligence that remain uniquely human.

The Future of Human-AI Partnership

So what does a realistic future of human-AI collaboration look like? Instead of replacement scenarios, imagine enhancement scenarios where AI tools amplify distinctly human capabilities while handling routine information processing tasks.

In creative work, AI might help generate initial ideas or variations, but humans provide the creative vision, aesthetic judgment, and emotional resonance. In analytical work, AI might process large datasets and identify patterns, but humans formulate the questions, interpret significance, and make decisions based on incomplete information and competing values.

In strategic work, AI might model scenarios and predict consequences, but humans set goals, navigate competing interests, and make ethical choices about trade-offs. In social work, AI might help organize information and suggest approaches, but humans provide empathy, build relationships, and understand cultural context.

This collaborative future requires developing new skills rather than fearing obsolescence. The most valuable future skills likely include learning to work effectively with AI tools while contributing capabilities that remain uniquely human—creative problem-solving, ethical reasoning, strategic thinking, and the ability to imagine and work toward possibilities that don't currently exist.

Responsible Innovation in an Irresponsible Narrative Environment

As an engineer who genuinely loves innovation, I want to address the responsibility dimension of AI development and deployment. Every powerful technology throughout history has been capable of both beneficial and harmful applications. Nuclear physics gave us both clean energy and weapons. The internet gave us unprecedented access to information and unprecedented surveillance capabilities. Social media promised global connection but also delivered isolation and misinformation at scale.

The pattern recurs: the harmful applications of powerful technologies are often easier to implement than the beneficial ones. This makes the current approach to AI development, where replacement narratives dominate over collaboration narratives, particularly concerning.

Responsible innovation means asking not just "Can we build this?" but "Should we build this, and how do we build it in ways that support rather than undermine human flourishing?" It means designing systems that preserve human agency rather than eliminating it, that augment human capabilities rather than replacing them.

The sustainability question is also crucial. Current AI systems require enormous computational resources to train and operate. If these systems are genuinely valuable, they should be priced accordingly rather than given away cheaply while threatening the livelihoods of the people they're supposed to serve. The economics need to support both technological development and human welfare.

Reframing the Conversation

Perhaps the most important shift we need is moving from competitive to collaborative framing of human-AI relationships. Instead of asking "What can AI do that humans can't?" we might ask "How can AI help humans do things they couldn't do alone?"

Instead of "Will AI replace human workers?" we might ask "How can AI tools help human workers become more effective, creative, and focused on work that requires genuine human intelligence?"

Instead of "How do we prepare for AI disruption?" we might ask "How do we develop AI systems that support human goals and values while solving problems we couldn't tackle without computational assistance?"

This reframing changes everything about how we approach AI development, deployment, and integration into human work and social systems. It shifts focus from replacement to augmentation, from competition to collaboration, from disruption to enhancement.

The Path Forward

The future of artificial intelligence isn't predetermined by technological capabilities alone—it's being shaped by the choices we make about how to develop, deploy, and integrate these systems into human society. Those choices are influenced by how we understand and discuss AI capabilities, limitations, and potential applications.

The replacement narrative serves narrow economic interests but doesn't serve broader human welfare or even long-term economic productivity. The collaboration narrative is more complex but also more realistic and more supportive of both technological innovation and human flourishing.

The most successful AI implementations maintain human agency while leveraging computational power. They don't eliminate human judgment but support it with better information processing. They don't replace human creativity but provide tools for exploring and developing creative ideas more effectively.

Understanding AI as sophisticated pattern recognition with specific strengths and limitations helps us develop more realistic expectations and more effective applications. Recognizing the economic forces that shape AI narratives helps us evaluate claims more critically. Focusing on collaboration rather than replacement helps us build systems that actually serve human needs and goals.

Why This Matters Now

We're at a crucial moment in the development of AI technology. The basic capabilities have been demonstrated, but the social and economic frameworks for deploying these capabilities are still being established. The narratives we embrace now will influence policy decisions, educational priorities, career choices, and investment patterns for years to come.

If we continue down the path of replacement narratives, we'll likely see AI development that prioritizes eliminating human involvement rather than enhancing human capabilities. If we shift toward collaboration narratives, we're more likely to see AI development that creates genuine value while preserving human agency and dignity.

The choice isn't between embracing AI uncritically and rejecting it entirely. The choice is between thoughtful, human-centered development and deployment on the one hand, and technology-driven disruption that treats human welfare as secondary to efficiency metrics on the other.

Your second brain isn't plotting against your first brain. It's waiting to help you think more effectively, create more ambitiously, and solve problems you couldn't handle alone. But that collaborative future requires understanding what AI actually is and isn't, rather than accepting oversimplified narratives about replacement or revolution.

The conversation about AI's role in human society is just beginning, but it's beginning with a lot of misconceptions that need clearing up. Understanding the technology, the economics, and the human factors involved is the first step toward building the future we actually want to live in rather than the one we're being told is inevitable.

After all, in a world where AI handles pattern recognition at scale, the uniquely human capabilities of creativity, ethical reasoning, and collaborative problem-solving become more valuable, not less. The question isn't whether you'll be replaced by AI—the question is how effectively you'll learn to work with AI to accomplish things neither of you could manage alone.